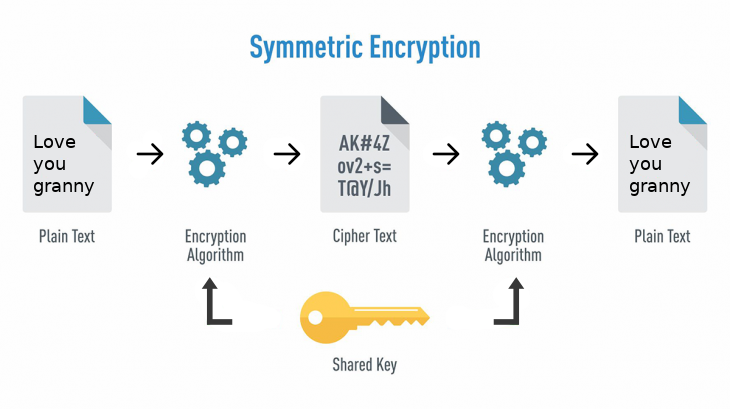

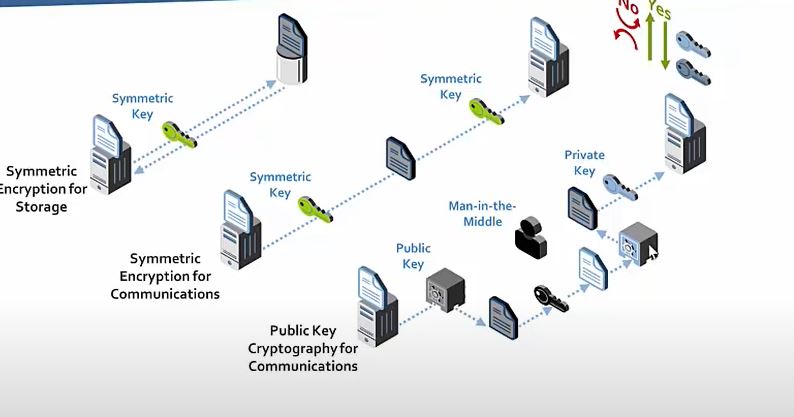

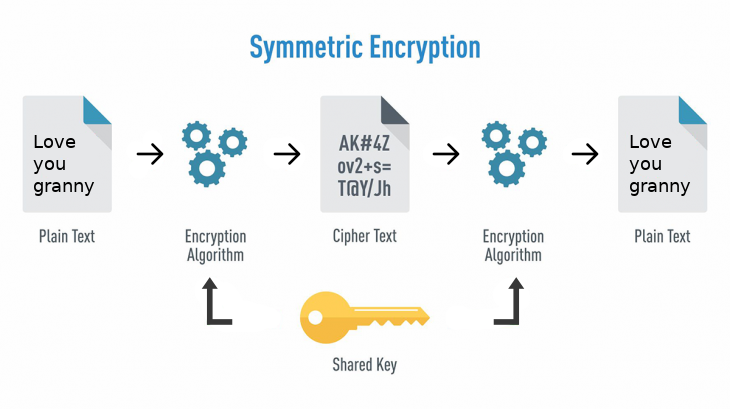

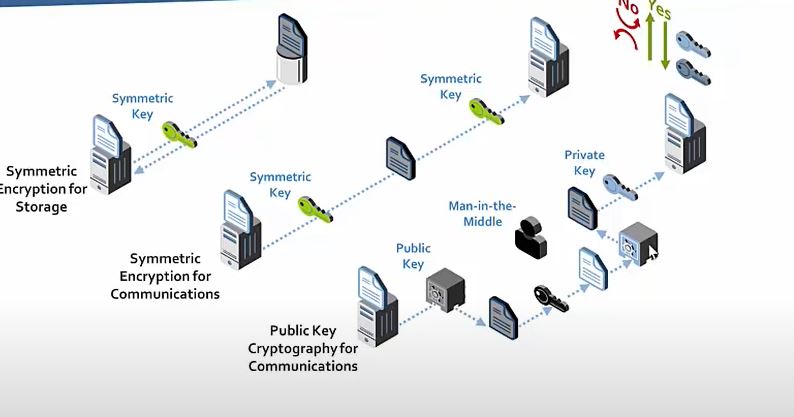

Symmetric Key Encryption (Private key Encryption)

- The same key is used by the client and the server to encrypt and decrypt messages.

- A copy of the key exists at both ends.

- The first time, the newly generated key must be sent securely to the other side.

- Public-key (asymmetric) encryption is used to deliver this copy of the symmetric key the first time.

- One might ask: if the key can be shared securely the first time, why not use that same method for all communication? The answer is that asymmetric encryption is resource-intensive.

- Advantage: encryption and decryption are faster than with asymmetric-key encryption.

- Disadvantage: the key must be transferred securely the first time, and it must be stored securely at both ends.
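The key property of symmetric encryption, one shared key that both encrypts and decrypts, can be sketched with the Python standard library alone. This is a toy stream cipher assumed for illustration: it XORs the data with a keystream derived from SHA-256 in counter mode. It is not a vetted cipher; real systems use something like AES-GCM from a cryptography library. The names `keystream` and `xor_cipher` are hypothetical.

```python
import hashlib
import secrets


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes by hashing key + nonce + counter with SHA-256."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR-ing twice with the same keystream restores the input."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, nonce, len(data))))


# Both ends hold the SAME secret key (delivered securely beforehand).
shared_key = secrets.token_bytes(32)
nonce = secrets.token_bytes(16)  # sent with the ciphertext; never reuse it with the same key

ciphertext = xor_cipher(shared_key, nonce, b"hello server")  # client encrypts
plaintext = xor_cipher(shared_key, nonce, ciphertext)        # server decrypts
print(plaintext)  # b'hello server'
```

The same function is used on both sides, which is exactly why the key has to reach the other end securely first: anyone holding `shared_key` can decrypt.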

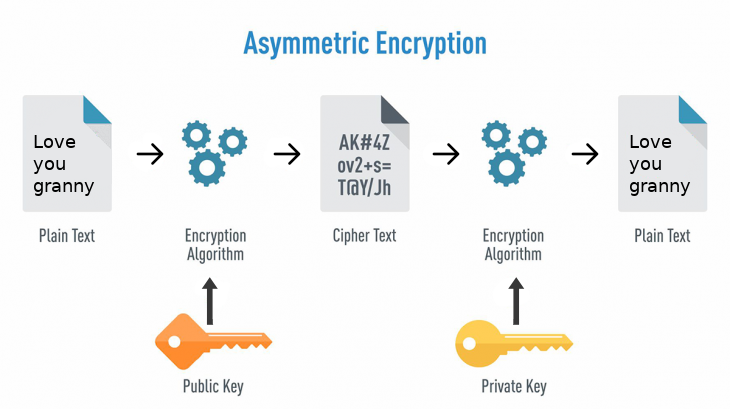

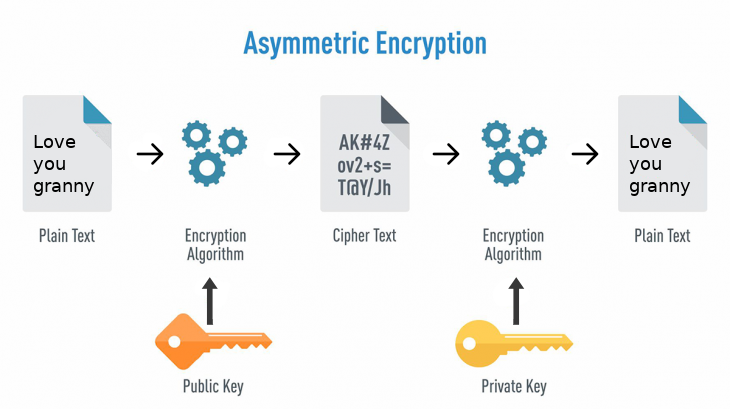

Asymmetric Key Encryption (Public key Encryption)

- Uses a pair of keys: a public key and a private key.

- Data encrypted with one key can only be decrypted with the other key. The client uses the server's public key to encrypt, and the server uses its private key to decrypt.

- The public key is shared openly so that the server can receive messages that clients have encrypted with it.

- This is similar to an open safe (public key) and the key that opens it (private key). Anyone can lock data in the safe without needing a key, just as anyone can encrypt data with the public key, but only the server, which holds the private key, can unlock it.
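To make the public/private split concrete, here is a minimal "textbook RSA" sketch with the classic tiny primes 61 and 53. These sizes and the lack of padding are assumptions for illustration only; real keys are thousands of bits and real RSA encryption uses padding such as OAEP. The function names are hypothetical.

```python
# Toy key generation with tiny primes (textbook values; far too small for real use)
p, q = 61, 53
n = p * q                  # modulus, part of both keys: 3233
phi = (p - 1) * (q - 1)    # 3120
e = 17                     # public exponent, coprime with phi
d = pow(e, -1, phi)        # private exponent = modular inverse of e (Python 3.8+): 2753

public_key = (n, e)   # shared with everyone (the "open safe")
private_key = (n, d)  # kept only on the server (the "key to the safe")


def encrypt(message: int, key: tuple[int, int]) -> int:
    n, e = key
    return pow(message, e, n)


def decrypt(ciphertext: int, key: tuple[int, int]) -> int:
    n, d = key
    return pow(ciphertext, d, n)


m = 65                           # the message, encoded as an integer smaller than n
c = encrypt(m, public_key)       # client locks the data with the public key
print(c)                         # 2790
print(decrypt(c, private_key))   # 65
```

Knowing `public_key` is enough to lock data, but unlocking needs `d`, which can only be computed easily by someone who knows the factors `p` and `q`.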

Man-in-the-Middle Attack

- The man in the middle generates his own key pair and hands his public key to the client in place of the server's.

- The client uses the public key provided by the man in the middle to encrypt and send its data.

- The man in the middle decrypts the data with his private key, then re-encrypts it with the server's public key and forwards it, so the request looks genuine to the server.

- Certificates are used to address this issue.
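The attack steps above can be simulated with toy textbook-RSA key pairs (tiny primes, no padding; both assumptions for illustration). The helper names `make_keypair` and `apply` are hypothetical. Note that nothing in the client's code can tell which public key it received, which is the gap certificates close.

```python
def make_keypair(p: int, q: int, e: int = 17):
    """Toy RSA key pair from two small primes (illustration only)."""
    n, phi = p * q, (p - 1) * (q - 1)
    return (n, e), (n, pow(e, -1, phi))


def apply(key, value: int) -> int:
    """Encrypt or decrypt with a (modulus, exponent) key."""
    n, exp = key
    return pow(value, exp, n)


server_pub, server_priv = make_keypair(61, 53)
mitm_pub, mitm_priv = make_keypair(47, 59)  # the attacker's own key pair

# 1. The client asks for the server's public key, but the attacker answers with his own.
key_client_received = mitm_pub

# 2. The client encrypts its secret with whatever key it received.
secret = 42
to_server = apply(key_client_received, secret)

# 3. The attacker decrypts with his private key, reads the data,
#    and re-encrypts it with the real server's public key before forwarding it.
stolen = apply(mitm_priv, to_server)
forwarded = apply(server_pub, stolen)

# 4. The server decrypts normally and sees nothing unusual.
received = apply(server_priv, forwarded)
print(stolen, received)  # 42 42
```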

Certificates

- The main purpose of a digital certificate is to ensure that the public key contained in the certificate belongs to the entity to which the certificate was issued. In other words, it verifies that a person sending a message is who he or she claims to be, and then provides the message receiver with the means to encrypt a reply back to the sender.

- The certificate can be cross-checked and confirmed with the certificate authority.
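A certificate can be pictured as a small record that binds a name to a public key, plus a CA signature over that binding. The sketch below assumes a toy textbook-RSA CA key built from the Mersenne primes 2**61-1 and 2**89-1 and a simple `subject|n|e|issuer` encoding; real certificates use the X.509 format and proper signature schemes. `Certificate`, `ca_sign`, and `cross_check` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass

# Toy RSA key pair for the CA (known Mersenne primes; illustration only).
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
CA_N = P * Q
CA_D = pow(E, -1, (P - 1) * (Q - 1))


def digest(data: bytes, n: int) -> int:
    """SHA-256 of the data, reduced into the RSA modulus range."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


@dataclass
class Certificate:
    subject: str          # who the key belongs to, e.g. a domain name
    public_key: tuple     # (n, e) of the subject
    issuer: str           # the CA that vouches for the binding
    signature: int = 0

    def to_be_signed(self) -> bytes:
        n, e = self.public_key
        return f"{self.subject}|{n}|{e}|{self.issuer}".encode()


def ca_sign(cert: Certificate) -> Certificate:
    """CA side: sign the name-to-key binding with the CA's private key."""
    cert.signature = pow(digest(cert.to_be_signed(), CA_N), CA_D, CA_N)
    return cert


def cross_check(cert: Certificate, expected_subject: str) -> bool:
    """Client side: the CA's signature must verify and the name must match."""
    signed_ok = pow(cert.signature, E, CA_N) == digest(cert.to_be_signed(), CA_N)
    return signed_ok and cert.subject == expected_subject


cert = ca_sign(Certificate("example.com", (3233, 17), "Toy CA"))
print(cross_check(cert, "example.com"))  # True
```

If an attacker swaps a different public key into `cert`, the bytes covered by the signature change and `cross_check` fails, which is how the certificate defeats the key substitution in a man-in-the-middle attack.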

Certificate Authority (CA)

- A certificate authority (CA) is a trusted entity that issues digital certificates, which are data files used to cryptographically link an entity with a public key. Certificate authorities are a critical part of the internet's public key infrastructure (PKI) because they issue the Secure Sockets Layer (SSL/TLS) certificates that web browsers use to authenticate content sent from web servers.

- The role of the certificate authority is to bind a server's public key to a name that the browser can verify, to make sure the response comes from the genuine server. The CA validates the identity of the certificate owner; its role is to provide trust.

- A certificate must contain the public key, which can be cross-checked with the certificate authority (CA).

- CAs are mostly large companies, such as Symantec or Google, that act as a third party to provide trust.

- With a self-signed certificate, you generate and sign the certificate yourself (for example, on your own server) instead of having a CA issue it; no CA is involved. Because no trusted third party vouches for it, a client cannot tell a genuine self-signed certificate from one an attacker generated, so this method may open the door to a man-in-the-middle attack.

- When you issue certificates from your own custom CA, you get a root certificate: the custom CA's own self-signed certificate. This root certificate must be installed on every client system that accesses data from the server, so that those clients can verify the certificates the custom CA issues.
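The difference between a self-signed certificate and one issued by a custom CA comes down to what is in the client's trust store. The sketch below models the trust store as a dictionary from issuer name to public key and uses toy textbook-RSA keys built from known Mersenne primes; these, the names `keypair`, `issue`, `verify`, and the hypothetical host `intranet.local` and CA `My Root CA` are assumptions for illustration, not how a browser or OS actually stores roots.

```python
import hashlib


def keypair(p: int, q: int, e: int = 65537):
    """Toy RSA key pair from two known Mersenne primes (illustration only)."""
    n = p * q
    return (n, e), (n, pow(e, -1, (p - 1) * (q - 1)))


def digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def tbs(cert: dict) -> bytes:
    """The 'to be signed' part: subject, subject public key, issuer."""
    return f"{cert['subject']}|{cert['public_key']}|{cert['issuer']}".encode()


def issue(subject: str, subject_pub, issuer: str, issuer_priv) -> dict:
    """Create a certificate for `subject`, signed with the issuer's private key."""
    cert = {"subject": subject, "public_key": subject_pub, "issuer": issuer}
    n, d = issuer_priv
    cert["signature"] = pow(digest(tbs(cert), n), d, n)
    return cert


def verify(cert: dict, trust_store: dict) -> bool:
    """Accept only if the issuer is trusted by this client and its signature checks out."""
    issuer_pub = trust_store.get(cert["issuer"])
    if issuer_pub is None:
        return False  # no trusted third party vouches for this public key
    n, e = issuer_pub
    return pow(cert["signature"], e, n) == digest(tbs(cert), n)


server_pub, server_priv = keypair(2**61 - 1, 2**89 - 1)
root_pub, root_priv = keypair(2**107 - 1, 2**127 - 1)  # hypothetical custom CA

client_store = {}  # stands in for the client's trust store (no custom root yet)

# Self-signed: the server signs its own certificate, so issuer == subject.
self_signed = issue("intranet.local", server_pub, "intranet.local", server_priv)
print(verify(self_signed, client_store))  # False

# Issued by the custom CA: rejected until its root certificate is installed on the client.
issued = issue("intranet.local", server_pub, "My Root CA", root_priv)
print(verify(issued, client_store))  # False
client_store["My Root CA"] = root_pub
print(verify(issued, client_store))  # True
```

Installing the root once lets the client accept every certificate that custom CA issues, which is why the root certificate has to be present on all client systems.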

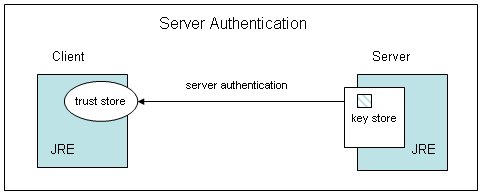

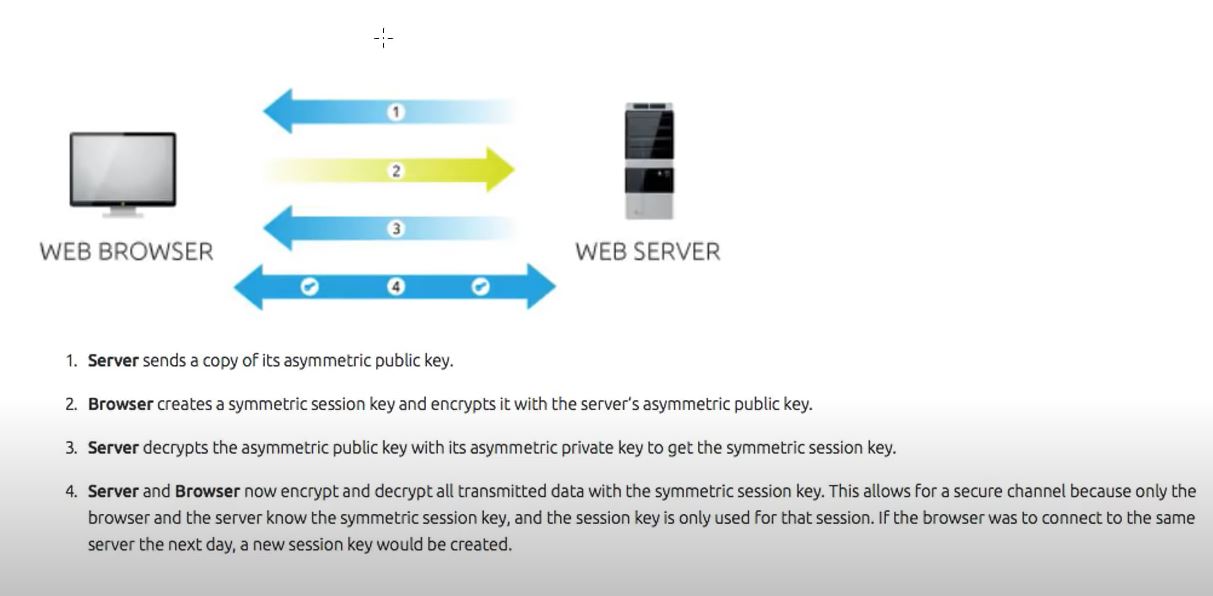

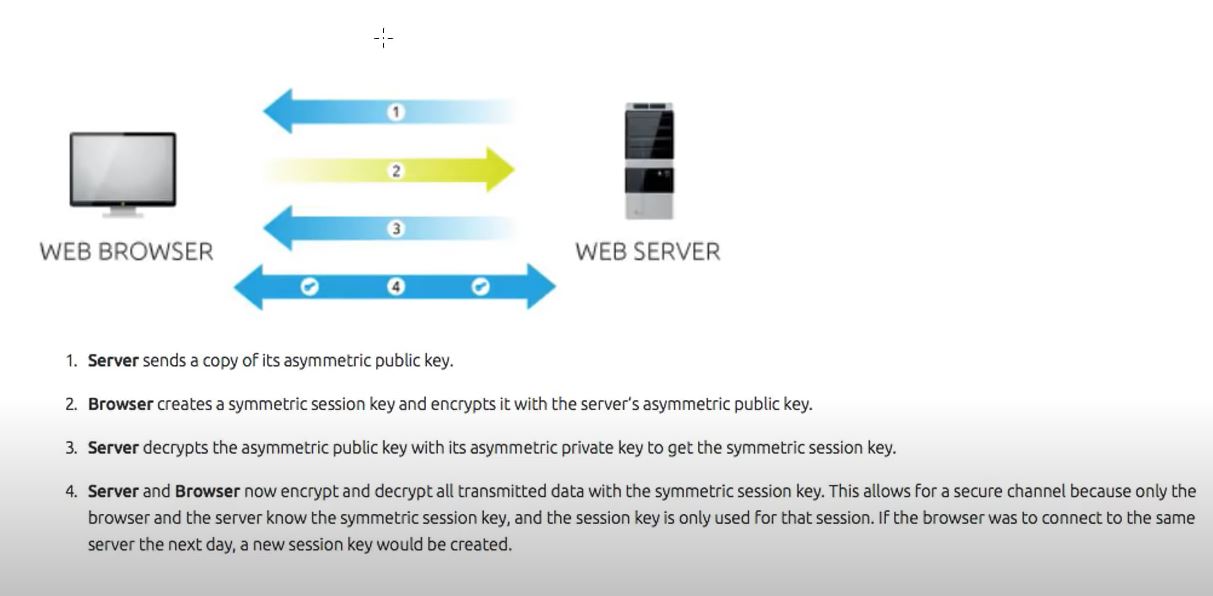

Communication over HTTPS (HTTP over Secure Sockets Layer/TLS)

- An SSL (TLS) certificate is a web server's digital certificate issued by a third party. The third party verifies the identity of the web server and its public key.

- When you make a request to an HTTPS website, the site's server sends its certificate, which contains its public key and is digitally signed by a third party, the certificate authority (CA).

- On receiving the certificate, the browser checks whether it is valid by verifying the CA's signature with the CA's public key, which is already present in the browser's or operating system's store of trusted root certificates (the browser may additionally query the CA to check whether the certificate has been revoked).

- After verifying the certificate, the browser creates a symmetric key, keeping one copy for itself and sending the other to the server. It encrypts this key with the web server's public key before sending it.

- The web server uses its private key to decrypt the symmetric key. From then on, communication uses the shared symmetric key.
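The handshake steps above combine both kinds of encryption: asymmetric encryption to deliver the key, symmetric encryption for the data. This is a simplified sketch under stated assumptions: textbook RSA without padding, a server key built from the Mersenne primes 2**89-1 and 2**107-1, and a toy SHA-256 keystream standing in for a real symmetric cipher. Real TLS uses padded or Diffie-Hellman-based key exchange and derives its session keys; `xor_stream` is a hypothetical name.

```python
import hashlib
import secrets

# Toy RSA key pair for the web server (known Mersenne primes; illustration only).
P, Q, E = 2**89 - 1, 2**107 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))
server_public, server_private = (N, E), (N, D)


def xor_stream(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR with a SHA-256 counter keystream (same call decrypts)."""
    stream = b"".join(hashlib.sha256(key + i.to_bytes(4, "big")).digest()
                      for i in range(len(data) // 32 + 1))
    return bytes(a ^ b for a, b in zip(data, stream))


# Browser: after verifying the certificate, generate a random symmetric key...
session_key = secrets.token_bytes(16)  # 128-bit key, smaller than N
# ...and send it encrypted with the server's public key from the certificate.
n, e = server_public
wrapped = pow(int.from_bytes(session_key, "big"), e, n)

# Server: decrypt with the private key to obtain its copy of the symmetric key.
n, d = server_private
server_copy = pow(wrapped, d, n).to_bytes(16, "big")

# From here on both sides use the shared symmetric key.
request = xor_stream(session_key, b"GET / HTTP/1.1")
print(xor_stream(server_copy, request))  # b'GET / HTTP/1.1'
```

The expensive public-key operation happens once per connection; every later message uses the fast symmetric cipher, which is the trade-off described in the symmetric-key notes.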

Typically, an applicant for a digital certificate will generate a key pair consisting of a private key and a public key, along with a certificate signing request (CSR) (Step 1). A CSR is an encoded text file that includes the public key and other information that will be included in the certificate (e.g. domain name, organization, email address, etc.). Key pair and CSR generation are usually done on the server or workstation where the certificate will be installed, and the type of information included in the CSR varies depending on the validation level and intended use of the certificate. Unlike the public key, the applicant's private key is kept secure and should never be shown to the CA (or anyone else).

After generating the CSR, the applicant sends it to a CA (Step 2), which independently verifies that the information it contains is correct (Step 3) and, if so, digitally signs the certificate with an issuing private key and sends it to the applicant.
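Step 1 can be sketched as follows. Real CSRs are PKCS #10 structures, usually PEM-encoded, and are signed with the applicant's own private key as proof of possession; here JSON and a toy textbook-RSA signature (key built from the Mersenne primes 2**61-1 and 2**89-1) stand in for that format, and `make_csr` plus the example domain, organization, and email are hypothetical. The point is that the CSR carries the public key and identity details, while the private key never leaves the applicant's machine.

```python
import hashlib
import json

# Step 1 (toy): the applicant generates a key pair on its own server.
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
N = P * Q
private_key = (N, pow(E, -1, (P - 1) * (Q - 1)))  # stays on the server, never sent
public_key = (N, E)


def make_csr(info: dict, pub, priv) -> dict:
    """Bundle the public key with the certificate details and sign the bundle.

    Signing with the private key proves the applicant holds it, without revealing it.
    """
    body = json.dumps({"public_key": list(pub), **info}, sort_keys=True).encode()
    n, d = priv
    h = int.from_bytes(hashlib.sha256(body).digest(), "big") % n
    return {"body": body.decode(), "signature": pow(h, d, n)}


csr = make_csr({"domain": "example.com", "organization": "Example Org",
                "email": "admin@example.com"}, public_key, private_key)
print(csr["body"])  # public key plus identity details; no private exponent
```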

When the signed certificate is presented to a third party (such as when that person accesses the certificate holder’s website), the recipient can cryptographically confirm the CA’s digital signature via the CA’s public key. Additionally, the recipient can use the certificate to confirm that signed content was sent by someone in possession of the corresponding private key, and that the information has not been altered since it was signed.
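Both checks in the paragraph above can be sketched with toy textbook-RSA signatures over SHA-256 hashes. The key pairs are built from known Mersenne primes and the certificate is a simple `name|public key` byte string; these, and the helper names `keypair`, `sign`, and `verify`, are assumptions for illustration rather than the real X.509 signature algorithms.

```python
import hashlib


def keypair(p: int, q: int, e: int = 65537):
    """Toy RSA key pair from two known Mersenne primes (illustration only)."""
    n = p * q
    return (n, e), (n, pow(e, -1, (p - 1) * (q - 1)))


def sign(data: bytes, priv) -> int:
    n, d = priv
    return pow(int.from_bytes(hashlib.sha256(data).digest(), "big") % n, d, n)


def verify(data: bytes, sig: int, pub) -> bool:
    n, e = pub
    return pow(sig, e, n) == int.from_bytes(hashlib.sha256(data).digest(), "big") % n


ca_pub, ca_priv = keypair(2**107 - 1, 2**127 - 1)    # CA's issuing key pair
site_pub, site_priv = keypair(2**61 - 1, 2**89 - 1)  # certificate holder's key pair

# The CA signs the certificate, binding the holder's name to its public key.
cert_body = f"example.com|{site_pub}".encode()
cert_sig = sign(cert_body, ca_priv)

# The holder signs some content with its private key.
content = b"Welcome to example.com"
content_sig = sign(content, site_priv)

# Recipient 1: confirm the CA's signature using the CA's public key.
print(verify(cert_body, cert_sig, ca_pub))  # True
# Recipient 2: confirm the content was signed with the private key matching the certified public key.
print(verify(content, content_sig, site_pub))  # True
# Recipient 3: any alteration after signing makes verification fail.
print(verify(b"Welcome to evil.com", content_sig, site_pub))  # False
```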