Finding Friends via map reduce

Link

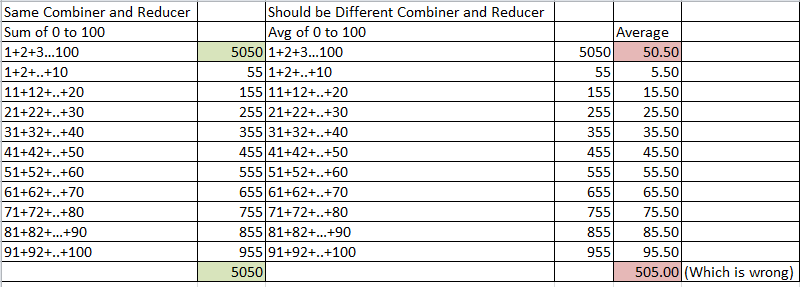

Difference between combiner and Reducer

- One constraint that a Combiner will have, unlike a Reducer, is that the input/output key and value types must match the output types of your Mapper.

- Combiners can only be used on the functions that are commutative(a.b = b.a) and associative {a.(b.c) = (a.b).c} . This also means that combiners may operate only on a subset of your keys and values or may not execute at all, still you want the output of the program to remain same.

- Reducers can get data from multiple Mappers as part of the partitioning process. Combiners can only get its input from one Mapper.

difference between Hdfs block and input split

- Block is the physical part of disk which has minimum amount of data that can be read or write. The actual size of block is decided during the design phase.For example, the block size of HDFS can be 128MB/256MB though the default HDFS block size is 64 MB.

- HDFS block are physically entity while Input split is logical partition.

- What is logical partition –> Logical partition means it will has just the information about blocks address or location. In the case where last record (value) in the block is incomplete,the input split includes location information for the next block and byte offset of the data needed to complete the record.

Counters Example Link

User Defined Counters Example

What is GenericOptionsParser

GenericOptionsParser is a utility to parse command line arguments generic to the Hadoop framework. GenericOptionsParser recognizes several standard command line arguments, enabling applications to easily specify a namenode, a ResourceManager, additional configuration resources etc.

The usage of GenericOptionsParser enables to specify Generic option in the command line itself

Eg: With Genericoption you can specify the following

>>hadoop jar /home/hduser/WordCount/wordcount.jar WordCount -Dmapred.reduce.tasks=20 input output

GenericOptionsParser vs ToolRunner

There’s no extra privileges, but your Command line options get run via the GenericOptionsParser, which will allow you extract certain configuration properties and configure a Configuration object from it

By using ToolRunner.run(), any hadoop application can handle standard command line options supported by hadoop. ToolRunner uses GenericOptionsParser internally. In short, the hadoop specific options which are provided command line are parsed and set into the Configuration object of the application.

eg. If you say:

>>hadoop MyHadoopApp -D mapred.reduce.tasks=3

Then ToolRunner.run(new MyHadoopApp(), args) will automatically set the value parameter mapred.reduce.tasks to 3 in the Configuration object.

Basically rather that parsing some options yourself (using the index of the argument in the list), you can explicitly configure Configuration properties from the command line:

hadoop jar myJar.jar com.Main prop1value prop2value

public static void main(String args[]) {

Configuration conf = new Configuration();

conf.set("prop1", args[0]);

conf.set("prop2", args[1]);

conf.get("prop1"); // will resolve to "prop1Value"

conf.get("prop2"); // will resolve to "prop2Value"

}

Becomes much more condensed with ToolRunner:

hadoop jar myJar.jar com.Main -Dprop1=prop1value -Dprop2=prop2value

public int run(String args[]) {

Configuration conf = getConf();

conf.get("prop1"); // will resolve to "prop1Value"

conf.get("prop2"); // will resolve to "prop2Value"

}

GenericOptionsParser, Tool, and ToolRunner for running Hadoop Job

Hadoop comes with a few helper classes for making it easier to run jobs from the command line. GenericOptionsParser is a class that interprets common Hadoop command-line options and sets them on a Configuration object for your application to use as desired. You don’t usually use GenericOptionsParser directly, as it’s more convenient to implement the Tool interface and run your application with the ToolRunner, which uses GenericOptionsParser internally:

public interface Tool extends Configurable {

int run(String [] args) throws Exception;

}

Below example shows a very simple implementation of Tool, for running the Hadoop Map Reduce Job.

public class WordCountConfigured extends Configured implements Tool {

@Override

public int run(String[] args) throws Exception {

Configuration conf = getConf();

return 0;

}

}

public static void main(String[] args) throws Exception {

int exitCode = ToolRunner.run(new WordCountConfigured(), args);

System.exit(exitCode);

}

We make WordCountConfigured a subclass of Configured, which is an implementation of the Configurable interface. All implementations of Tool need to implement Configurable (since Tool extends it), and subclassing Configured is often the easiest way to achieve this. The run() method obtains the Configuration using Configurable’s getConf() method, and then iterates over it, printing each property to standard output.

WordCountConfigured’s main() method does not invoke its own run() method directly. Instead, we call ToolRunner’s static run() method, which takes care of creating a Configuration object for the Tool, before calling its run() method. ToolRunner also uses a GenericOptionsParser to pick up any standard options specified on the command line, and set them on the Configuration instance. We can see the effect of picking up the properties specified in conf/hadoop-localhost.xml by running the following command:

>>hadoop WordCountConfigured -conf conf/hadoop-localhost.xml -D mapred.job.tracker=localhost:10011 -D mapred.reduce.tasks=n

Options specified with -D take priority over properties from the configuration files. This is very useful: you can put defaults into configuration files, and then override them with the -D option as needed. A common example of this is setting the number of reducers for a MapReduce job via -D mapred.reduce.tasks=n. This will override the number of reducers set on the cluster, or if set in any client-side configuration files. The other options that GenericOptionsParser and ToolRunner support are listed in Table.